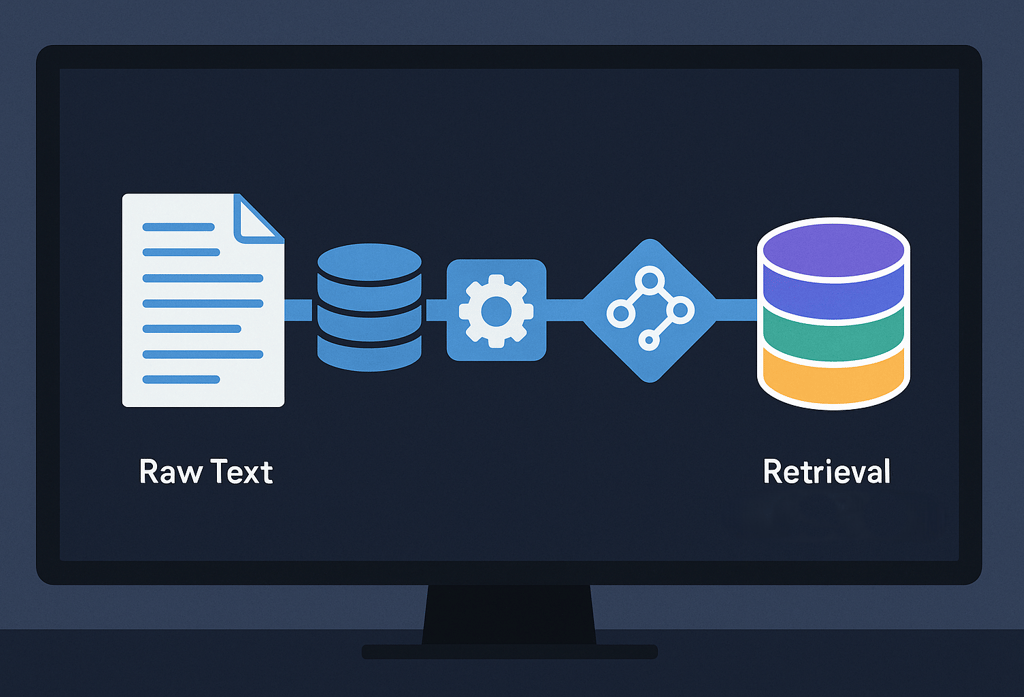

Data Pipelines for LLMs, From Raw Text to Retrieval-Ready Corpora

Master the complete data pipeline for LLM-powered retrieval systems. Learn essential techniques for ingestion, cleaning, de-duplication, chunking, and embedding that transform messy raw text into high-quality RAG corpora. Discover strategies for metadata enrichment and ongoing re-indexing that prevent the silent performance decay plaguing production systems.

11/4/2024 · 3 min read

The success of any Retrieval-Augmented Generation (RAG) system hinges less on the sophistication of your language model than on the quality of the data pipeline feeding it. While developers rush to implement the latest embedding models and vector databases, they often overlook the unglamorous work of data plumbing. That mistake surfaces later, when retrieval quality degrades over time.

Ingestion: The First Critical Junction

Data ingestion establishes the foundation for everything downstream. Modern enterprises pull information from disparate sources: cloud storage buckets, internal wikis, CRM systems, support tickets, and PDF repositories. The key is building connectors that preserve context. A document's metadata—creation date, author, department, and document type—proves just as valuable as its content during retrieval.

Smart ingestion strategies implement change detection mechanisms. Rather than re-processing entire datasets daily, mature pipelines track file modifications, API timestamps, or database change logs. This approach reduces computational overhead while ensuring fresh data flows continuously into your system.
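One way to sketch change detection is to compare content fingerprints between runs. The example below uses SHA-256 content hashing, which is a substitute for the file-modification times, API timestamps, and change logs mentioned above rather than one of them. The function names and the dictionary shapes are assumptions made for illustration.

```python
import hashlib


def content_fingerprint(data: bytes) -> str:
    """Return a stable SHA-256 hex digest of a document's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def detect_changes(current: dict[str, bytes], seen: dict[str, str]) -> dict[str, list[str]]:
    """Classify documents as new, modified, or deleted since the last run.

    `current` maps a document ID to its raw bytes (hypothetical shape).
    `seen` maps a document ID to the fingerprint stored by the previous run.
    """
    changes: dict[str, list[str]] = {"new": [], "modified": [], "deleted": []}
    for doc_id, data in current.items():
        fingerprint = content_fingerprint(data)
        if doc_id not in seen:
            changes["new"].append(doc_id)
        elif seen[doc_id] != fingerprint:
            changes["modified"].append(doc_id)
    # Anything recorded last time but missing now should leave the index too.
    changes["deleted"] = [doc_id for doc_id in seen if doc_id not in current]
    return changes
```

Only the documents listed under "new" and "modified" need to be re-cleaned, re-chunked, and re-embedded; the ones under "deleted" can be dropped from the index.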

Cleaning: Separating Signal from Noise

Raw text arrives laden with artifacts: HTML tags, navigation menus, footers, OCR errors, and formatting inconsistencies. Effective cleaning removes these without destroying semantic meaning. Regular expressions handle straightforward cases, but nuanced cleaning requires domain awareness. A financial document's tables contain critical data; a webpage's sidebar navigation does not.
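As a minimal sketch of structure-aware cleaning, the example below uses Python's standard `html.parser` module to drop the contents of boilerplate elements while keeping body text. The set of tags treated as boilerplate is an assumption; a real corpus would need its own list, and tables that carry data would need separate handling.

```python
import re
from html.parser import HTMLParser

# Elements whose contents are treated as boilerplate (an assumed list).
SKIP_TAGS = {"nav", "footer", "script", "style", "aside"}


class TextExtractor(HTMLParser):
    """Collect text that is not nested inside a boilerplate element."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self.skip_depth = 0  # greater than 0 while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.parts.append(data)


def clean_html(raw: str) -> str:
    """Strip tags and boilerplate sections, then collapse whitespace."""
    parser = TextExtractor()
    parser.feed(raw)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip()
```

A parser is used here instead of a regular expression because nested elements such as links inside a navigation bar are hard to remove reliably with pattern matching alone.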

Text normalization deserves careful consideration. Aggressive stemming or lemmatization can obscure technical terms or product names. The goal isn't linguistic purity—it's maximizing retrieval relevance. Preserve acronyms, maintain case sensitivity for proper nouns, and keep technical terminology intact.
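A conservative normalizer might look like the sketch below. It assumes the pipeline lowercases ordinary words, which the guidance above does not require, and it keeps all-caps acronyms plus any term in a caller-supplied `protected` set untouched. The function name and the `protected` parameter are hypothetical.

```python
import re
import unicodedata


def normalize(text: str, protected: set[str]) -> str:
    """Lowercase ordinary words while preserving acronyms and protected terms."""
    # Unify Unicode forms (for example full-width characters) and whitespace.
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"\s+", " ", text).strip()
    tokens = []
    for token in text.split(" "):
        core = token.strip(".,;:!?()")  # compare without surrounding punctuation
        is_acronym = core.isupper() and len(core) > 1
        if core in protected or is_acronym:
            tokens.append(token)
        else:
            tokens.append(token.lower())
    return " ".join(tokens)
```

For example, `normalize("The  API for PostgreSQL returned an Error.", {"PostgreSQL"})` keeps `API` and `PostgreSQL` as written and lowercases the rest. Note that no stemming or lemmatization is applied.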

De-duplication: The Hidden Performance Killer

Duplicate content poisons RAG systems subtly. When identical or near-identical chunks appear multiple times in your corpus, they dominate retrieval results, crowding out diverse, relevant information. Users receive repetitive responses, and compute resources are wasted on redundant embeddings.

Effective de-duplication operates at multiple levels. Exact duplicates get caught through content hashing. Near-duplicates require fuzzy matching—MinHash or SimHash algorithms efficiently identify substantial overlap. Don't forget cross-document duplication: the same paragraph often appears in multiple versions of policy documents or across related reports.
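The two levels can be sketched with the standard library alone: a content hash for exact duplicates and a small hand-rolled MinHash for near-duplicates. This is an illustrative implementation, not a tuned one; the shingle size, the number of hash functions, and all function names are assumptions, and a production system would more likely use an established library with locality-sensitive hashing to avoid pairwise comparison.

```python
import hashlib


def exact_key(text: str) -> str:
    """Hash lowercased, whitespace-normalized text to catch exact duplicates."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set[str]:
    """Return the set of k-word shingles for a text."""
    words = text.lower().split()
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash_signature(items: set[str], num_hashes: int = 64) -> list[int]:
    """For each salted hash function, keep the minimum hash over all items."""
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(4, "big")  # a different salt gives a different hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(item.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "big",
            )
            for item in items
        ))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """Estimate Jaccard similarity as the fraction of matching signature slots."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)
```

Chunks whose `exact_key` values match can be dropped immediately. Pairs whose estimated similarity exceeds a chosen threshold are near-duplicate candidates, for example two versions of the same policy paragraph; the right threshold depends on the corpus and needs tuning.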

Chunking: The Art of Segmentation

Chunking strategy directly impacts retrieval precision. Chunks that are too large return excessive irrelevant context; chunks that are too small lose coherent meaning. The optimal size varies by use case, but 256 to 512 tokens is a reasonable starting point for most applications.

Semantic chunking outperforms naive character-splitting. Respect document structure: preserve complete sentences, maintain paragraph boundaries, and keep related concepts together. For technical documentation, keep code blocks with their explanatory text. For legal documents, never split mid-clause.

Implement overlapping windows—allowing adjacent chunks to share 10-20% of their content. This prevents critical information from being split at chunk boundaries, a common failure mode that degrades retrieval quality.
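A sliding window with overlap can be sketched as follows. The example works on a pre-tokenized list and ignores sentence and paragraph boundaries, so it shows only the overlap mechanics; the default of 32 shared tokens out of 256 (12.5%) is an assumed value within the range suggested above.

```python
def chunk_tokens(tokens: list[str], chunk_size: int = 256, overlap: int = 32) -> list[list[str]]:
    """Split tokens into fixed-size windows that share `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks
```

With ten tokens, `chunk_size=4`, and `overlap=1`, the windows cover tokens 0 to 3, 3 to 6, and 6 to 9, so each boundary token appears in both neighbours.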

Embedding: Encoding Meaning

Embedding generation transforms text into numerical representations that capture semantic meaning. Model selection matters: domain-specific embedding models often outperform general-purpose alternatives on specialized corpora. A model trained on medical literature is likely to handle clinical terminology better than one trained on general web text, though this is worth verifying on your own queries.

Batch processing improves throughput by amortizing per-request overhead. Modern embedding APIs accept multiple texts per request, so send chunks in batches rather than one at a time. Weigh embedding dimensionality as well: higher-dimensional embeddings can capture more nuance but increase storage and search costs. The 768-dimensional outputs of BERT-base-sized models often suffice, while newer models push toward 1024 dimensions or higher.
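Batching can be sketched independently of any particular provider. In the example below, `embed_batch` is a hypothetical callable standing in for whatever embedding client is in use; it is assumed to take a list of texts and return one vector per text, in order. The batch size of 64 is also an assumption.

```python
from typing import Callable, Iterator, Sequence


def batched(items: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `batch_size` items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def embed_corpus(
    texts: Sequence[str],
    embed_batch: Callable[[Sequence[str]], list[list[float]]],
    batch_size: int = 64,
) -> list[list[float]]:
    """Embed all texts with one backend call per batch, preserving order."""
    vectors: list[list[float]] = []
    for batch in batched(texts, batch_size):
        result = embed_batch(batch)
        if len(result) != len(batch):
            # Guard against silently misaligned chunks and vectors.
            raise RuntimeError("embedding backend returned an unexpected number of vectors")
        vectors.extend(result)
    return vectors
```

As a rough storage check, assuming 4-byte floats: one million chunks at 768 dimensions take about 3.07 GB of raw vector data, versus about 4.10 GB at 1024 dimensions, before any index overhead.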

Metadata Strategy: Context is King

Rich metadata transforms retrieval from keyword matching into contextual search. Store document source, publication date, author, and category alongside each chunk. This enables filtered search—users can constrain queries to specific time periods, departments, or document types.

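Metadata also powers combined search strategies. Pairing dense vector similarity with metadata filtering often outperforms either approach alone. (The term "hybrid search" more commonly refers to mixing dense and keyword scoring, but the principle of combining signals is the same.) A query about "Q3 financial results" benefits from semantic search within documents tagged with "finance" and "2024-Q3."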
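The "Q3 financial results" case can be sketched as a pre-filter followed by a similarity ranking. This is a brute-force, in-memory illustration with hypothetical names (`Chunk`, `filtered_search`); a vector database would apply the same idea through its own filter syntax.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    vector: list[float]
    metadata: dict[str, str] = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity, returning 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(
    query_vector: list[float],
    chunks: list[Chunk],
    filters: dict[str, str],
    top_k: int = 3,
) -> list[Chunk]:
    """Keep chunks whose metadata matches every filter, then rank by similarity."""
    candidates = [
        chunk for chunk in chunks
        if all(chunk.metadata.get(key) == value for key, value in filters.items())
    ]
    ranked = sorted(candidates, key=lambda c: cosine(query_vector, c.vector), reverse=True)
    return ranked[:top_k]
```

Calling `filtered_search(query_vector, chunks, {"category": "finance", "period": "2024-Q3"})` ranks only the chunks carrying both tags, so a semantically close chunk from another quarter cannot crowd out the right one.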

Ongoing Re-indexing: Fighting Data Rot

RAG systems decay without maintenance. Documents update, new information arrives, and outdated content persists. Implement scheduled re-indexing pipelines that refresh your corpus regularly—daily for rapidly changing data, weekly or monthly for stable content.
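A scheduler can decide what to refresh from a per-source interval table. The source names and intervals below are assumptions chosen to mirror the daily, weekly, and monthly cadence described above.

```python
from datetime import datetime, timedelta

# Hypothetical sources and refresh cadences; adjust to the real corpus.
REFRESH_INTERVALS = {
    "support_tickets": timedelta(days=1),
    "wiki": timedelta(weeks=1),
    "policies": timedelta(days=30),
}


def sources_due(last_indexed: dict[str, datetime], now: datetime) -> list[str]:
    """Return sources that were never indexed or whose interval has elapsed."""
    due = []
    for source, interval in REFRESH_INTERVALS.items():
        last = last_indexed.get(source)
        if last is None or now - last >= interval:
            due.append(source)
    return due
```

Running this check on a timer and passing the result to the ingestion step keeps fast-moving sources fresh without reprocessing stable ones.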

Version control for embeddings prevents catastrophic failures during model upgrades. When switching embedding models, maintain parallel indices temporarily. This allows rollback if new embeddings perform poorly and enables A/B testing of retrieval quality.
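Parallel indices with rollback can be sketched as a small registry keyed by embedding model version. The class and method names are hypothetical, and the index itself is represented as a plain dictionary from chunk ID to vector to keep the example self-contained.

```python
from typing import Optional

Index = dict[str, list[float]]  # chunk ID -> embedding vector


class IndexRegistry:
    """Hold several index versions and track which one serves queries."""

    def __init__(self) -> None:
        self._indices: dict[str, Index] = {}
        self._active: Optional[str] = None
        self._previous: Optional[str] = None

    def register(self, model_version: str, index: Index) -> None:
        """Store an index built with a given embedding model version."""
        self._indices[model_version] = index

    def promote(self, model_version: str) -> None:
        """Make a registered index the active one, remembering the old one."""
        if model_version not in self._indices:
            raise KeyError(f"unknown index version: {model_version}")
        self._previous, self._active = self._active, model_version

    def rollback(self) -> None:
        """Return to the index that was active before the last promotion."""
        if self._previous is None:
            raise RuntimeError("no previous index to roll back to")
        self._active, self._previous = self._previous, None

    @property
    def active(self) -> Optional[str]:
        return self._active

    def get(self, model_version: str) -> Index:
        """Fetch any registered index, for example to A/B test it."""
        return self._indices[model_version]
```

Because vectors from different embedding models are not comparable, queries must be embedded with the same model version as the index they are sent to.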

Monitor retrieval metrics continuously: track null results, user feedback, and semantic drift. These signals indicate when pipelines need adjustment or when corpus quality has degraded.
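These signals can be summarized from query logs. The sketch below assumes a hypothetical log record with a result count, the top similarity score, and optional user feedback. Using the mean top score as a rough proxy for semantic drift is an assumption, not a standard definition.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QueryLog:
    query: str
    num_results: int
    top_score: float = 0.0          # similarity of the best hit, if any
    helpful: Optional[bool] = None  # user feedback; None means not rated


def summarize(logs: list[QueryLog]) -> dict[str, float]:
    """Compute null-result rate, mean top score, and negative feedback rate."""
    total = len(logs)
    answered = [log for log in logs if log.num_results > 0]
    rated = [log for log in logs if log.helpful is not None]
    return {
        "null_rate": (total - len(answered)) / total if total else 0.0,
        "mean_top_score": (
            sum(log.top_score for log in answered) / len(answered) if answered else 0.0
        ),
        "negative_feedback_rate": (
            sum(not log.helpful for log in rated) / len(rated) if rated else 0.0
        ),
    }
```

Tracking these values per day or per week makes a gradual decline visible: a rising null rate or a falling mean top score suggests the corpus no longer covers what users are asking.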

The pipeline from raw text to retrieval-ready corpus demands attention to unglamorous details, but these details determine whether your RAG system delivers value or frustration.