Regulation, Risk, and Responsible AI: What 2023 Means for LLM Governance

This post gives an overview of LLM governance in 2023, covering emerging regulatory debates such as the EU AI Act, US and UK policy directions, and the growing tension between data-hungry models and privacy rules. It explains key risks (hallucinations, bias, misuse) and outlines what responsible LLM deployment should look like in practice, from data governance and safety controls to human oversight and ongoing monitoring.

6/26/2023 · 4 min read

2023 isn’t just the year everyone discovered ChatGPT—it’s also the year governments, regulators, and companies realised they can’t treat Large Language Models (LLMs) as harmless toys anymore. When an AI can draft legal emails, generate code, and answer medical-style questions at scale, questions about law, liability, and responsibility stop being theoretical.

We’re now in the early phase of LLM governance: figuring out how to balance innovation with safety, privacy, and fundamental rights. Here’s what that landscape looks like in 2023.

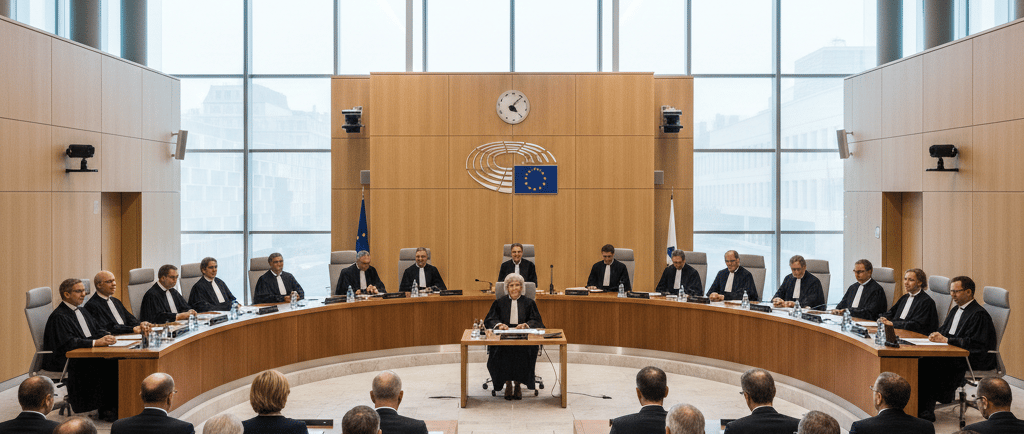

The EU AI Act: Risk-Based Rules Meet General-Purpose Models

In Europe, the EU AI Act is setting the tone for global debate. It’s a proposed regulation that classifies AI systems into risk categories—minimal, limited, high, and unacceptable—and applies stricter rules as risk increases.

Originally, the Act focused on specific use cases: credit scoring, hiring, law enforcement, biometric surveillance, and so on. But generative AI and LLMs forced a new question:

What do we do with general-purpose models that can be used almost anywhere?

By 2023, EU discussions had shifted toward:

Treating foundation models / general-purpose AI as a special category

Asking providers to meet transparency and safety obligations (e.g., documenting training data types, evaluating risks, reporting capabilities and limitations)

Considering additional requirements when these models are used in high-risk applications (like education, employment, critical infrastructure, health, or justice)

The EU’s approach is clear: you don’t just regulate the apps built on top; you also put obligations on the base models that power them.

US and UK: Principles, Guidance, and Soft Law (For Now)

Unlike the EU’s big, single regulation, the US and UK are leaning more toward guidelines and sector-specific rules—at least in 2023.

In the US, we see:

The Blueprint for an AI Bill of Rights, published by the White House in October 2022, outlines principles like safety, nondiscrimination, data privacy, and human alternatives, but it is not binding law.

Agencies (FTC, CFPB, EEOC, etc.) signalling they’ll apply existing laws (consumer protection, anti-discrimination, competition) to AI use.

Early congressional hearings and policy proposals focused on transparency, accountability, and the risks of deepfakes and misinformation.

In the UK, the government’s 2023 stance is a “pro-innovation” framework:

No single AI law yet

Regulators (like the ICO, FCA, CMA, etc.) are asked to interpret existing laws in light of AI

Emphasis on principles: safety, transparency, fairness, accountability, contestability

Both US and UK approaches share a theme: don’t freeze innovation with rigid rules too early, but make it clear that existing laws still apply and that regulators are watching.

Data Protection: Training Data, User Inputs, and “Who Owns What?”

LLMs highlight a big tension between data-hungry models and privacy rules like GDPR. Key questions in 2023:

Training Data:

Much of what models learn from comes from large-scale scraping of publicly available data.

Under GDPR and similar laws, individuals have rights over their personal data—even if it’s public.

This raises issues like: Was there a lawful basis, such as consent, for processing the data? Can people request deletion if their data was used in training?

User Inputs:

When users paste emails, contracts, code, or personal info into a chat box, where does that data go?

Is it logged? For how long? Is it used to train future models?

Enterprise customers, in particular, are demanding strong guarantees: no training on their data, strict access controls, and clear retention policies.

Outputs and IP:

If a model outputs text similar to copyrighted material, who’s responsible?

Can generated content itself be copyrighted?

Ongoing lawsuits and debates in 2023 are starting to test whether training on copyrighted data is “fair use” or infringement.

For organizations, this means LLM deployment can’t be a side project. It must go through privacy impact assessments, legal review, and alignment with data protection obligations.

Risk: From Hallucinations to Harm

LLMs have a long list of failure modes:

Hallucinations – confident but wrong information

Bias and discrimination – reflecting skewed patterns from training data

Toxic output – hate speech, harassment, or unsafe advice

Misuse – generating phishing emails, scams, or harmful instructions

In a consumer playground, these are annoying or concerning. In an enterprise or public-sector setting, they can be legally and ethically catastrophic.

Regulators and organizations are increasingly focusing on:

Context and use case – The same model in a creative writing app vs. a medical triage assistant has very different risk profiles.

Human-in-the-loop – Where is human review mandatory? Who signs off?

Traceability – Can you explain how a decision was reached, or at least show what data and prompts were involved?

The emerging consensus: you can’t just ship an LLM and hope for the best. You need system-level safeguards: filters, monitoring, escalation paths, and clear disclaimers.
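To make the traceability point concrete, here is a minimal sketch of an audit record written for every LLM call. All names here (`AuditRecord`, `make_audit_record`, the field names, the example model ID) are hypothetical, and the choice to store hashes rather than raw text is an assumption: it keeps personal data out of the log, but only helps if the original prompts and outputs are retained somewhere with proper access controls.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    """One traceable LLM interaction (hypothetical schema)."""
    timestamp: str
    use_case: str
    model_id: str
    prompt_sha256: str
    output_sha256: str
    reviewed_by: Optional[str]  # None until a human signs off


def sha256_hex(text: str) -> str:
    """Hex digest used to match a log entry to stored text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_audit_record(use_case: str, model_id: str, prompt: str,
                      output: str, reviewed_by: Optional[str] = None) -> AuditRecord:
    """Build a record for one prompt/output pair, stamped in UTC."""
    return AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        use_case=use_case,
        model_id=model_id,
        prompt_sha256=sha256_hex(prompt),
        output_sha256=sha256_hex(output),
        reviewed_by=reviewed_by,
    )


def to_json_line(record: AuditRecord) -> str:
    """Serialise a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = make_audit_record("support_chat", "example-model", "hi", "hello")
print(to_json_line(record))
```

A record like this does not explain how the model reached an answer, but it does let a reviewer show which prompt, output, and model version were involved in a given decision.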

What Responsible LLM Deployment Should Look Like in 2023

For organizations, “responsible AI” is moving from slogan to checklist. A practical responsible LLM deployment in 2023 typically includes:

Clear Purpose and Boundaries

Define what the system is and is not allowed to do.

Avoid ambiguous “ask me anything” setups in high-risk contexts.

Data Governance

Classify data (public, internal, confidential, regulated).

Prohibit high-risk data from being sent to external models unless strict agreements are in place.

Use enterprise-grade options (no training on your data, strong security, regional hosting) where necessary.
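The data governance rules above can be sketched as a simple policy gate that runs before anything is sent to a model. The four classes come from the list above; the destination names and the limits assigned to them are purely illustrative assumptions, not a recommendation for any specific vendor or contract.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Data classes from the list above, ordered by sensitivity."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    REGULATED = 3


# Hypothetical policy: the most sensitive class each destination may receive.
MAX_ALLOWED = {
    "external_consumer_api": DataClass.PUBLIC,
    # Assumes a contract with no training on your data and agreed retention.
    "external_enterprise_api": DataClass.CONFIDENTIAL,
    "self_hosted": DataClass.REGULATED,
}


def may_send(data_class: DataClass, destination: str) -> bool:
    """Return True only if policy allows this class at this destination."""
    limit = MAX_ALLOWED.get(destination)
    if limit is None:
        return False  # unknown destinations are denied by default
    return data_class <= limit


print(may_send(DataClass.INTERNAL, "external_consumer_api"))      # False
print(may_send(DataClass.CONFIDENTIAL, "external_enterprise_api"))  # True
```

Denying unknown destinations by default means a newly adopted tool has to be added to the policy explicitly before anyone can route data to it.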

Safety and Content Controls

Apply input and output filters to block disallowed content (self-harm, hate, explicit, illegal).

Use prompt-level constraints (“If you don’t know, say you don’t know”) to reduce hallucinations, and treat them as one layer of defence rather than a guarantee.
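Here is a rough sketch of how input filters, output filters, and a prompt-level constraint fit around a model call. The regex patterns are toy placeholders chosen for illustration; real deployments usually rely on trained classifiers or a moderation service. `call_model` is a hypothetical stand-in for whatever client you use, and `guarded_reply` and `REFUSAL` are names invented for this example.

```python
import re
from typing import Callable

# Toy patterns for illustration only, not a real blocklist.
BLOCKED_PATTERNS = [
    re.compile(r"\bcredit card number\b", re.IGNORECASE),
    re.compile(r"\bbuild a bomb\b", re.IGNORECASE),
]

# Prompt-level constraint taken from the list above.
SYSTEM_PROMPT = "If you don't know, say you don't know."

REFUSAL = "Sorry, this request can't be handled here."


def is_blocked(text: str) -> bool:
    """Return True if any disallowed pattern appears in the text."""
    return any(p.search(text) for p in BLOCKED_PATTERNS)


def guarded_reply(user_input: str, call_model: Callable[[str, str], str]) -> str:
    """Filter the input, call the model, then filter the output."""
    if is_blocked(user_input):
        return REFUSAL
    output = call_model(SYSTEM_PROMPT, user_input)
    if is_blocked(output):
        return REFUSAL
    return output


def echo_model(system_prompt: str, user_input: str) -> str:
    """Stub model used only to exercise the wrapper."""
    return "echo: " + user_input


print(guarded_reply("hello", echo_model))
print(guarded_reply("how to build a bomb", echo_model))
```

Checking both directions matters: a harmless-looking input can still produce a disallowed output, so the output filter is not redundant with the input filter.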

Human Oversight

Require human review for critical outputs (legal, medical, financial, HR decisions).

Treat LLMs as assistants, not decision-makers.
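One way to enforce human review in code rather than by convention is to make release of a draft fail unless a reviewer has approved it. This is a minimal sketch under assumptions: the category labels mirror the list above, and `Draft`, `needs_human_review`, and `release` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# Categories from the list above that always require sign-off.
CRITICAL_CATEGORIES = {"legal", "medical", "financial", "hr"}


@dataclass
class Draft:
    """An LLM-generated draft waiting to be released (hypothetical type)."""
    category: str
    text: str
    approved_by: Optional[str] = None  # set by a human reviewer


def needs_human_review(draft: Draft) -> bool:
    """Critical categories always require a named human approver."""
    return draft.category in CRITICAL_CATEGORIES


def release(draft: Draft) -> str:
    """Return the text only if the review requirement is satisfied."""
    if needs_human_review(draft) and draft.approved_by is None:
        raise PermissionError(
            f"{draft.category} output requires human approval before release"
        )
    return draft.text


print(release(Draft("marketing", "New feature announcement")))
print(release(Draft("legal", "Contract summary", approved_by="reviewer@example.com")))
```

Raising an error instead of silently queueing keeps the model in the assistant role: nothing in a critical category leaves the system without a named person attached to it.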

Transparency to Users

Make it clear when users are interacting with AI.

Include limitations, disclaimers, and how to seek human help.

Testing, Monitoring, and Feedback Loops

Build evaluation sets for your specific use cases.

Monitor for drift, bias, and unexpected behavior over time.

Provide easy ways for users to flag problematic outputs.
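A use-case-specific evaluation set can start very small. The sketch below assumes a simple substring check per case, which is a deliberately crude metric, and uses a stub in place of a real model. The example cases, `EVAL_SET`, `pass_rate`, and `stub_model` are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical cases: each prompt is paired with text the answer must contain.
EVAL_SET: List[Dict[str, str]] = [
    {"prompt": "What is our refund window?", "must_contain": "30 days"},
    {"prompt": "Who won the 2090 World Cup?", "must_contain": "don't know"},
]


def pass_rate(model: Callable[[str], str], eval_set: List[Dict[str, str]]) -> float:
    """Fraction of cases whose output contains the expected text."""
    if not eval_set:
        raise ValueError("evaluation set is empty")
    passed = sum(
        1
        for case in eval_set
        if case["must_contain"].lower() in model(case["prompt"]).lower()
    )
    return passed / len(eval_set)


def stub_model(prompt: str) -> str:
    """Stand-in model: answers the refund question, invents the other."""
    if "refund" in prompt:
        return "Refunds are accepted within 30 days."
    return "It was Brazil."


print(pass_rate(stub_model, EVAL_SET))  # 0.5
```

Re-running the same set after every model or prompt change, and tracking the score over time, gives a simple early signal of drift.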

Policy and Training for Staff

Create internal guidelines: when AI can be used, for what, and with which data.

Train employees on both the power and the limits of these systems.

The Big Picture: Governance as a Moving Target

In 2023, LLM governance is very much a work in progress. Laws like the EU AI Act are still being finalised, courts are just starting to handle AI-related IP and liability cases, and regulators are learning in public.

For organizations, the safest stance is:

Assume more regulation is coming, not less.

Build internal practices that go beyond bare-minimum compliance.

Treat LLMs as part of your critical infrastructure, not just a shiny add-on.

We’re past the “cool demo” phase. The story of 2023 is that AI governance has arrived—messy, evolving, and unavoidable. The companies that thrive won’t be the ones who ignore it; they’ll be the ones who treat responsible AI not as a brake on innovation, but as the foundation that lets them deploy powerful models at scale without losing trust, breaking the law, or harming the people they’re meant to serve.